Latency vs. Throughput vs. Bandwidth

Latency, throughput, and bandwidth are the core metrics that describe the performance of a network or distributed system.

Latency determines how soon the first byte arrives, throughput is how much data you actually move per second, and bandwidth is the maximum capacity of the path.

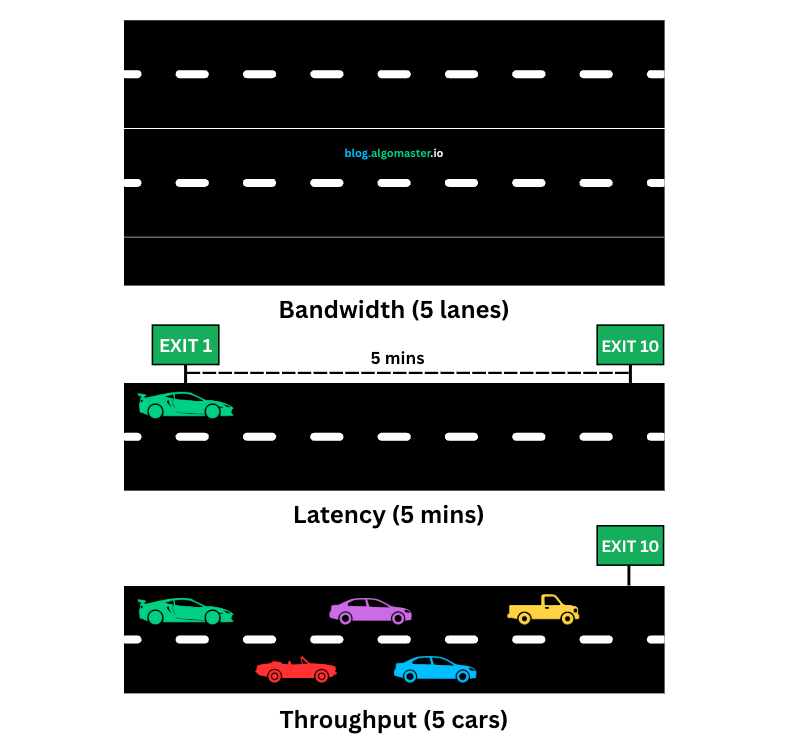

Let’s understand them with the highway analogy.

Bandwidth: The number of lanes on the highway (e.g., 5 lanes). This is the maximum physical capacity of the road.

Latency: The time it takes one car to drive from Exit 1 to Exit 10 at the speed limit with no traffic.

Throughput: The total number of cars that actually pass Exit 10 per hour.
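To make the three terms concrete for a network, here is a minimal sketch that treats a single transfer as "latency plus time to push the bits onto the link". The function names and the numbers (1 MB payload, 50 ms latency, 100 Mbps link) are illustrative assumptions, and the model ignores protocol overhead, retransmissions, and congestion.

```python
def transfer_time_s(size_bytes: int, latency_s: float, bandwidth_bps: float) -> float:
    """Idealized delivery time: one-way latency plus time to push the bits onto the link."""
    serialization_s = (size_bytes * 8) / bandwidth_bps
    return latency_s + serialization_s


def effective_throughput_bps(size_bytes: int, latency_s: float, bandwidth_bps: float) -> float:
    """Bits actually delivered per second for this single transfer."""
    return (size_bytes * 8) / transfer_time_s(size_bytes, latency_s, bandwidth_bps)


# Illustrative numbers (assumptions): 1 MB payload, 50 ms latency, 100 Mbps link.
size = 1_000_000
latency = 0.050
bandwidth = 100_000_000

print(f"transfer time: {transfer_time_s(size, latency, bandwidth):.3f} s")
print(f"throughput:    {effective_throughput_bps(size, latency, bandwidth) / 1e6:.1f} Mbps")
```

With these numbers the transfer takes about 0.13 s, so the effective throughput is roughly 61.5 Mbps even though the bandwidth is 100 Mbps. Throughput can approach bandwidth but, in this model, never exceeds it.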

Now, consider a traffic jam (congestion).

The bandwidth stays the same. The road still has 5 lanes.

The latency (travel time) rises for every car because cars slow down and wait in queues.

The throughput (cars per hour) drops because fewer cars clear the exit per hour.

Networks behave the same way. Congestion increases latency and reduces throughput even when raw bandwidth does not change.
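The same effect can be sketched with a toy model. Everything here is an assumption for illustration: congestion is represented by two made-up knobs, an extra queuing delay and the fraction of the link this transfer gets to use, while the link bandwidth is a constant that never changes. Real networks are far more complex, so treat this as a rough picture and not a simulation.

```python
LINK_BANDWIDTH_BPS = 100_000_000  # fixed capacity; congestion does not change it


def observe(size_bytes: int, base_latency_s: float,
            queue_delay_s: float, link_share: float) -> tuple[float, float]:
    """Return (latency_s, throughput_bps) for one transfer under a toy congestion model.

    queue_delay_s: extra waiting time in buffers (hypothetical knob).
    link_share:    fraction of the link available to this transfer, 0 < share <= 1.
    """
    latency_s = base_latency_s + queue_delay_s
    send_time_s = (size_bytes * 8) / (LINK_BANDWIDTH_BPS * link_share)
    throughput_bps = (size_bytes * 8) / (latency_s + send_time_s)
    return latency_s, throughput_bps


size = 1_000_000  # 1 MB payload (assumed)

idle = observe(size, base_latency_s=0.050, queue_delay_s=0.0, link_share=1.0)
busy = observe(size, base_latency_s=0.050, queue_delay_s=0.200, link_share=0.25)

for name, (lat, thr) in (("idle", idle), ("congested", busy)):
    print(f"{name:>9}: latency {lat * 1000:.0f} ms, "
          f"throughput {thr / 1e6:.1f} Mbps, "
          f"bandwidth {LINK_BANDWIDTH_BPS / 1e6:.0f} Mbps")
```

In the congested case latency rises from 50 ms to 250 ms and throughput falls from about 61.5 Mbps to about 14 Mbps, while the bandwidth line stays at 100 Mbps in both rows.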

Let’s now explore them in more detail.